Abstract

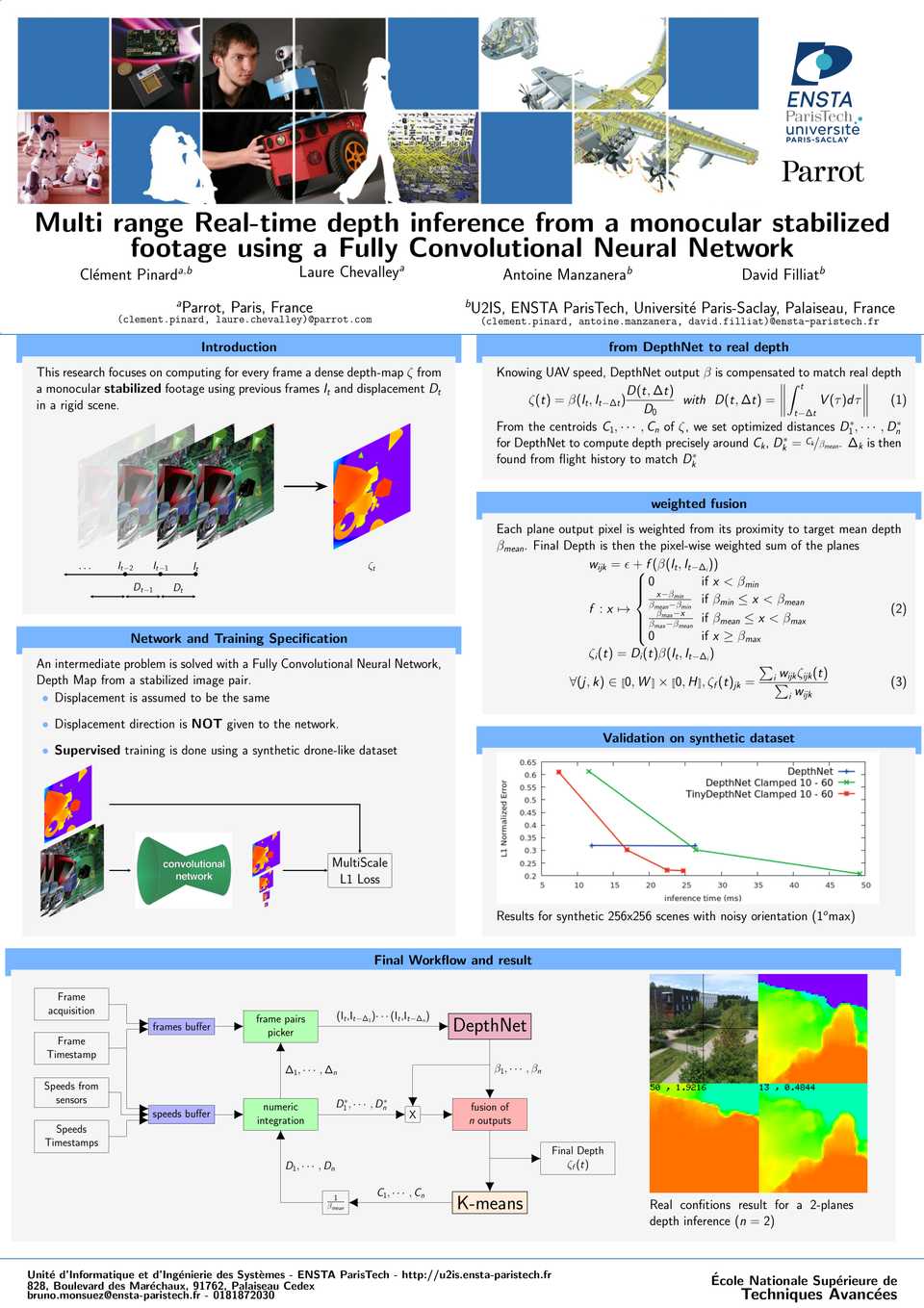

We propose a depth map inference system for monocular videos, based on a novel navigation dataset that mimics aerial footage from a gimbal-stabilized monocular camera in rigid scenes. Unlike in most navigation datasets, the lack of rotation makes the structure-from-motion problem easier, which can be leveraged for tasks such as depth inference and obstacle avoidance. We also propose an architecture for end-to-end depth inference with a fully convolutional network. Results show that, although the problem is tied to the camera's intrinsic parameters, it is locally solvable and leads to good-quality depth prediction.
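The claim that removing rotation simplifies structure from motion can be made concrete with a pinhole camera. The sketch below is an illustration, not the DepthNet network itself. It assumes a pinhole model with focal length `f` in pixels, pixel coordinates measured from the principal point, a known pure translation `(Tx, Ty, Tz)` between the two frames, and a point that stays in front of the camera. Under these assumptions the optical flow of a pixel `x` is `(Tz * x - f * (Tx, Ty)) / (Z - Tz)`, so depth can be read directly from the flow magnitude. The helper names `project` and `depth_from_flow` are hypothetical.

```python
import math


def project(point, f):
    """Pinhole projection of a 3D point (X, Y, Z) in camera coordinates.
    Returns pixel coordinates relative to the principal point."""
    X, Y, Z = point
    return (f * X / Z, f * Y / Z)


def depth_from_flow(pixel, flow, translation, f):
    """Recover depth Z at `pixel` from its optical flow, assuming the camera
    underwent a pure translation (Tx, Ty, Tz) and no rotation.

    For pure translation: flow = (Tz * pixel - f * (Tx, Ty)) / (Z - Tz),
    hence Z = Tz + |Tz * pixel - f * (Tx, Ty)| / |flow| (assuming Z > Tz).
    """
    tx, ty, tz = translation
    nx = tz * pixel[0] - f * tx
    ny = tz * pixel[1] - f * ty
    flow_norm = math.hypot(flow[0], flow[1])
    if flow_norm == 0.0:
        # Pixel at the focus of expansion: depth is not observable from flow.
        raise ValueError("zero flow: pixel lies at the focus of expansion")
    return tz + math.hypot(nx, ny) / flow_norm


# Example with arbitrary values: a point at depth 10, camera translated by T.
f = 500.0
P = (1.0, -0.5, 10.0)
T = (0.3, 0.1, 0.5)
P_moved = tuple(p - t for p, t in zip(P, T))  # same point in the second frame
p1, p2 = project(P, f), project(P_moved, f)
flow = (p2[0] - p1[0], p2[1] - p1[1])
print(depth_from_flow(p1, flow, T, f))  # approximately 10.0
```

The focal length and principal point enter the formula directly, which is one way to see why the problem is tied to the camera's intrinsic parameters. With rotation, the flow would also contain a depth-independent rotational component that would have to be estimated first.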

We also propose a multi-range architecture for unconstrained UAV flight, which leverages flight data from sensors to produce accurate depth maps of uncluttered outdoor environments. We test our algorithm on both synthetic scenes and real UAV flight data. Quantitative results are given for synthetic scenes with slightly noisy orientation, and show that our multi-range architecture improves depth inference.
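The abstract does not detail how the ranges are combined. The sketch below shows one plausible scheme under explicit assumptions, not necessarily the paper's method: the network is assumed to output depth for a fixed reference displacement (`reference_displacement`, hypothetical), the actual displacement of each frame pair is assumed to come from flight sensors, and the per-pixel rule that keeps the estimate closest to a hypothetical `preferred_range` is an illustrative choice. The rescaling step follows from pure-translation geometry: for a fixed flow, depth scales linearly with the translation magnitude.

```python
def rescale_depth(normalized_depth, displacement, reference_displacement):
    """Convert a depth predicted for `reference_displacement` into depth for
    the measured `displacement` (pure translation: depth scales linearly)."""
    return normalized_depth * displacement / reference_displacement


def distance_to_range(value, lo, hi):
    """Distance from `value` to the interval [lo, hi] (0 if inside)."""
    if value < lo:
        return lo - value
    if value > hi:
        return value - hi
    return 0.0


def fuse_multi_range(predictions, reference_displacement, preferred_range):
    """Fuse depth maps predicted from several frame pairs (hypothetical rule).

    predictions: list of (normalized_depth_map, displacement) tuples, one per
    frame gap; each map is a list of rows of floats, all of the same shape.
    For each pixel, keep the estimate whose normalized depth is closest to
    `preferred_range` (the first one wins on ties), then rescale it using the
    displacement of the frame pair it came from.
    """
    lo, hi = preferred_range
    height = len(predictions[0][0])
    width = len(predictions[0][0][0])
    fused = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            best_map, best_displacement = min(
                predictions,
                key=lambda pred: distance_to_range(pred[0][i][j], lo, hi),
            )
            fused[i][j] = rescale_depth(
                best_map[i][j], best_displacement, reference_displacement
            )
    return fused


# Hypothetical example: two frame gaps, reference displacement of 0.25 m.
near_pair = ([[5.0, 80.0]], 0.25)       # consecutive frames
far_pair = ([[5.0 / 3.0, 26.0]], 0.75)  # three times the displacement
print(fuse_multi_range([near_pair, far_pair], 0.25, (2.0, 30.0)))  # [[5.0, 78.0]]
```

Larger frame gaps give larger displacements and therefore larger flow for distant points, which is why combining several gaps can extend the usable depth range.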

Papers

Two papers were published for this project.

Additional Results

Code

Training code is available on GitHub.

Still Box Dataset

The Still Box Dataset was used to train our network. It is available for download here. It comes in 4 different image sizes, summarized below:

| Image size | Number of scenes | Total size (GB) | Compressed size (GB) |
|---|---|---|---|
| 64x64 | 80K | 19 | 9.8 |
| 128x128 | 16K | 12 | 7.1 |
| 256x256 | 3.2K | 8.5 | 5 |
| 512x512 | 3.2K | 33 | 19 |

More information is available on the official website: https://stillbox.ensta.fr/

Citation

If you use DepthNet in your research, please add the following references.

@Article{depthnet_uavg,
  AUTHOR = {Pinard, Cl{\'e}ment and Chevalley, Laure and Manzanera, Antoine and Filliat, David},
  TITLE = {end-to-end depth from motion with stabilized monocular videos},
  JOURNAL = {ISPRS Annals of Photogrammetry, Remote Sensing and Spatial Information Sciences},
  VOLUME = {IV-2/W3},
  YEAR = {2017},
  PAGES = {67--74},
  DOI = {10.5194/isprs-annals-IV-2-W3-67-2017}
}

@inproceedings{depthnet_ecmr,
  TITLE = {{Multi range Real-time depth inference from a monocular stabilized footage using a Fully Convolutional Neural Network}},
  AUTHOR = {Pinard, Cl{\'e}ment and Chevalley, Laure and Manzanera, Antoine and Filliat, David},
  URL = {https://hal.archives-ouvertes.fr/hal-01587658},
  BOOKTITLE = {{European Conference on Mobile Robotics}},
  ADDRESS = {Paris, France},
  ORGANIZATION = {{ENSTA ParisTech}},
  YEAR = {2017},
  MONTH = Sep,
  KEYWORDS = {Deep CNN ; HDR ; Drone ; Depth},
  PDF = {https://hal.archives-ouvertes.fr/hal-01587658/file/Article%20ECMR.pdf},
  HAL_ID = {hal-01587658},
  HAL_VERSION = {v1}
}