Abstract

This three-year PhD thesis was carried out at ENSTA Paris, under the supervision of Antoine Manzanera and David Filliat. As part of a CIFRE agreement (Convention Industrielle de Formation par la REcherche), I was also advised by Laure Chevalley at Parrot.

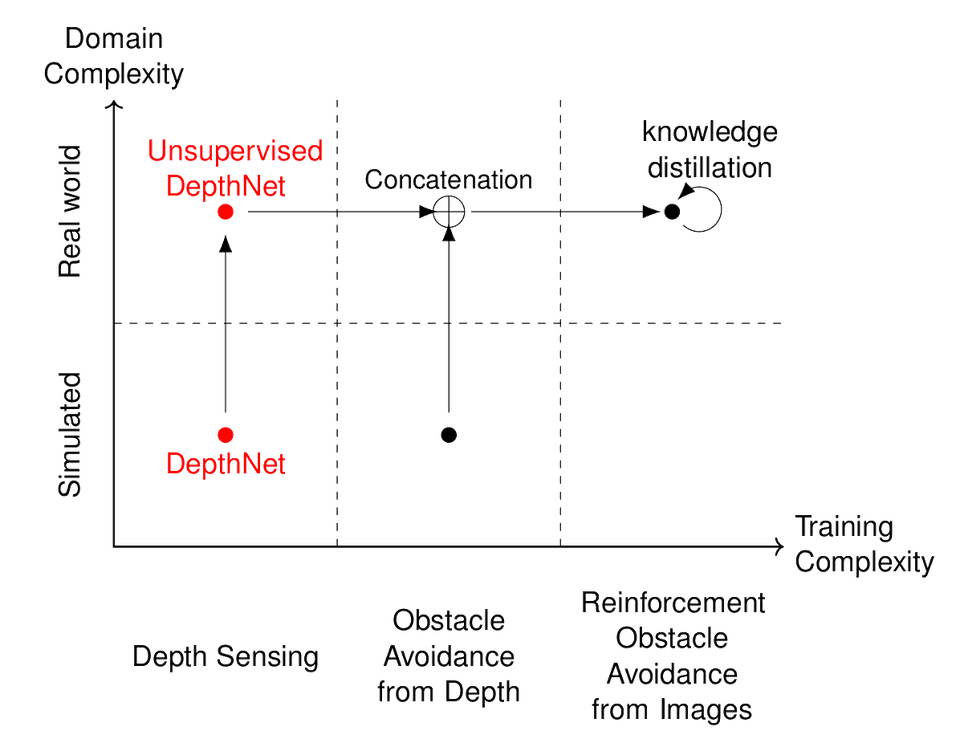

This work proposes advances in obstacle avoidance for monocular drones, that is, consumer unmanned aerial vehicles (UAVs) with a single camera, as opposed to stereo rigs, which have at least two. To this end, we divided the project into several tasks, ranked by task complexity and domain complexity. With only stabilized camera footage as input, depth sensing is expected to be a simpler task than obstacle avoidance. Our goal is to start from the bottom left of the chart (see the figure) and reach the upper right corner, where we are able to train obstacle avoidance with reinforcement learning.

This figure shows the general strategy that this three-year work is part of. The focus has been on perception, but the end goal is to merge the two fundamental tasks of obstacle avoidance: sense and avoid.

Manuscript

Robust Learning of a depth map for obstacle avoidance with a monocular stabilized flying camera

Full Text / Defense Slides / Hal / Cite

Contributions

DepthNet

DepthNet is heavily inspired by FlowNet, but it is trained on StillBox instead of Flying Chairs, and the network directly outputs depth.

See dedicated page: Introducing DepthNet

Study on reconstruction based loss

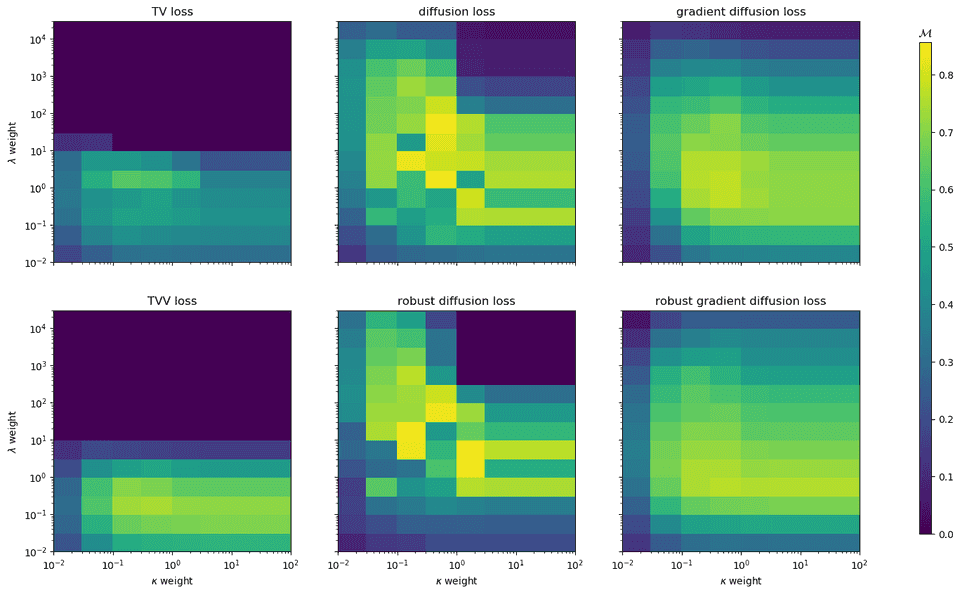

Using two toy problems, I conducted both a theoretical and an experimental study of reconstruction-based losses for self-supervision. Indeed, most recent papers suffer from two main problems:

- They rely too heavily on the KITTI benchmark, which tends to bias our view of what works better and what does not.

- The loss functions they introduce, which build on the classic photometric loss + smooth loss duo, are motivated only by empirical results and are thus biased toward a single dataset.

Unfortunately, this tells us little about accuracy on another dataset. This is especially the case for this thesis, where drone videos are dramatically different from in-car videos.
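To make the "photometric loss + smooth loss" duo concrete, here is a minimal 1D sketch. It is only an illustration under stated assumptions: the function names, the L1 penalties, the linear-interpolation warp and the weight `lam` are all hypothetical choices, not the exact losses or toy problems studied in the thesis.

```python
def inverse_warp_1d(reference, flow):
    """Reconstruct a target signal by sampling `reference` at i + flow[i].

    Uses linear interpolation and clamps sampling positions to the borders.
    """
    n = len(reference)
    warped = []
    for i, f in enumerate(flow):
        x = min(max(i + f, 0.0), n - 1.0)  # clamp to the valid range
        x0 = int(x)                        # floor, since x >= 0
        x1 = min(x0 + 1, n - 1)
        w = x - x0
        warped.append((1.0 - w) * reference[x0] + w * reference[x1])
    return warped


def photometric_loss(target, warped):
    """Mean absolute difference between the target and its reconstruction."""
    return sum(abs(t - r) for t, r in zip(target, warped)) / len(target)


def smooth_loss(flow):
    """Mean absolute first-order difference of the estimated field."""
    diffs = [abs(b - a) for a, b in zip(flow, flow[1:])]
    return sum(diffs) / len(diffs)


def reconstruction_loss(target, reference, flow, lam=0.1):
    """Photometric term plus a weighted smoothness term (lam is an assumed weight)."""
    warped = inverse_warp_1d(reference, flow)
    return photometric_loss(target, warped) + lam * smooth_loss(flow)
```

In this sketch the photometric term only "sees" the estimated field through the warped signal, while the smoothness term acts on the field directly. This is why the balance between the two terms (here `lam`) can behave very differently from one dataset to another.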


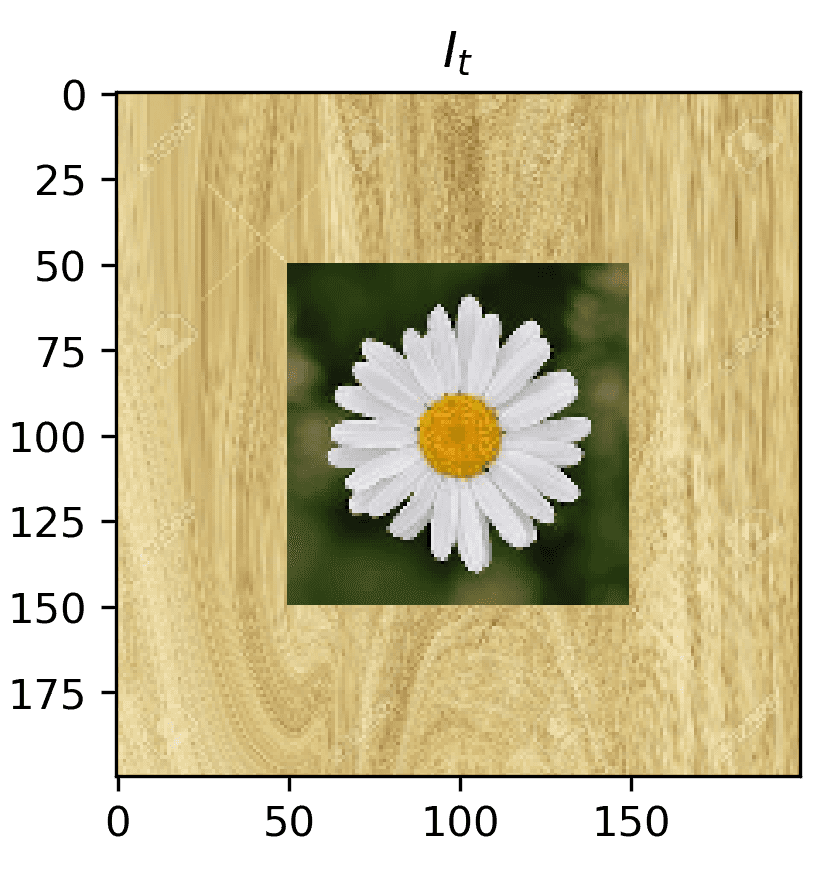

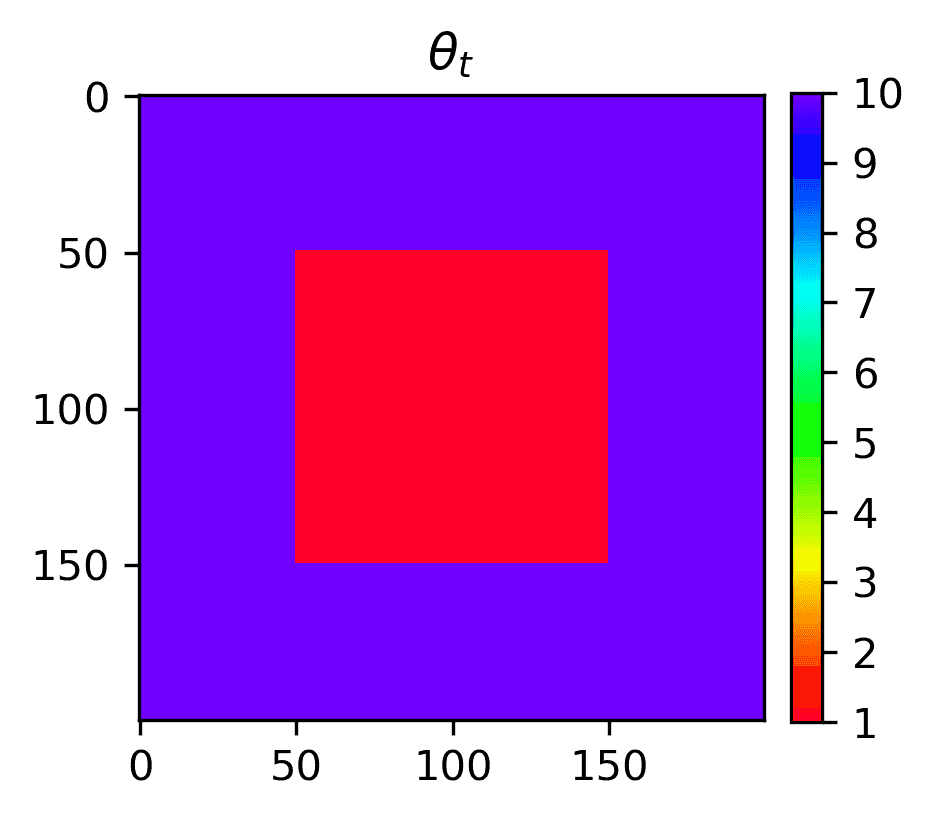

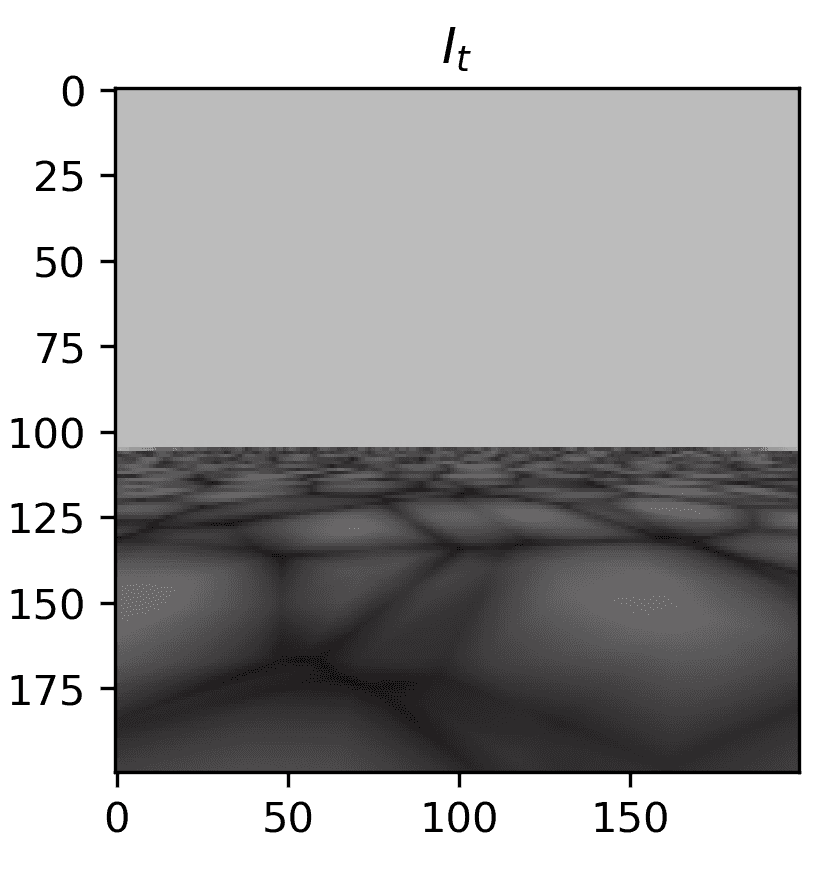

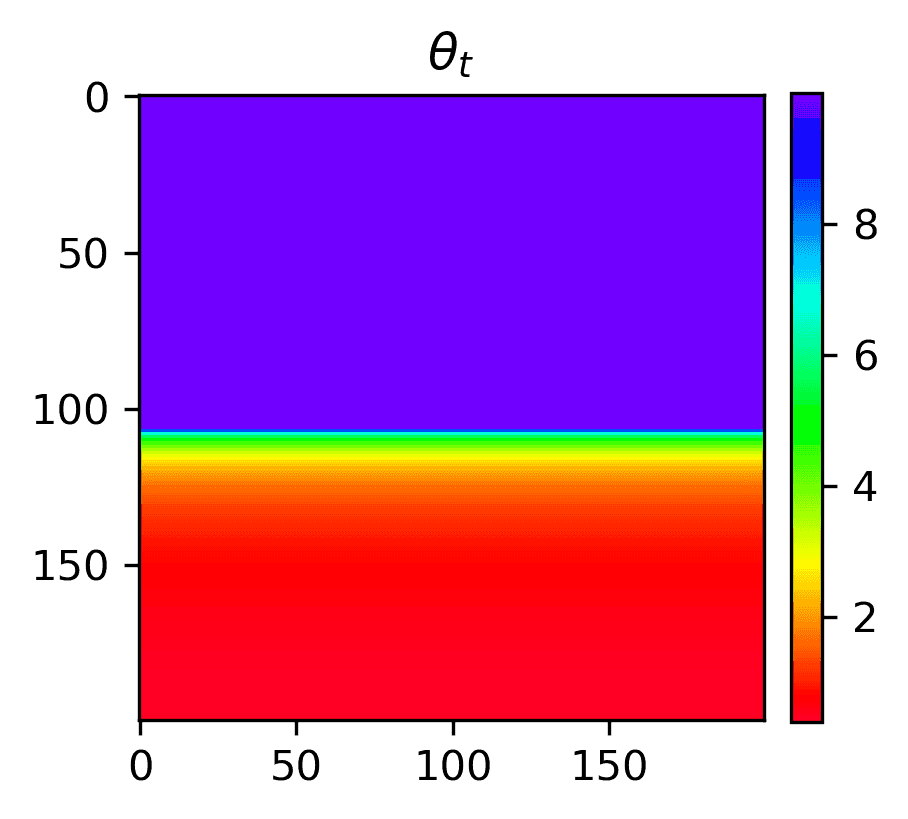

The two toy problems considered in the study, along with the final combined accuracy for several smooth loss functions. The x and y axes are two hyperparameters of the smooth loss function. This shows that even though the lowest error is reached with the diffusion loss, its landscape is much noisier than that of the gradient diffusion loss, and it is thus less robust to a change of dataset. See this GitHub repo to reproduce these figures.

This part focuses on the loss functions used in the literature for self-supervised depth training. Our goal is to understand the implications of each loss function with respect to its gradient. That way, we gain more insight than empirical results alone provide when trying new loss terms.

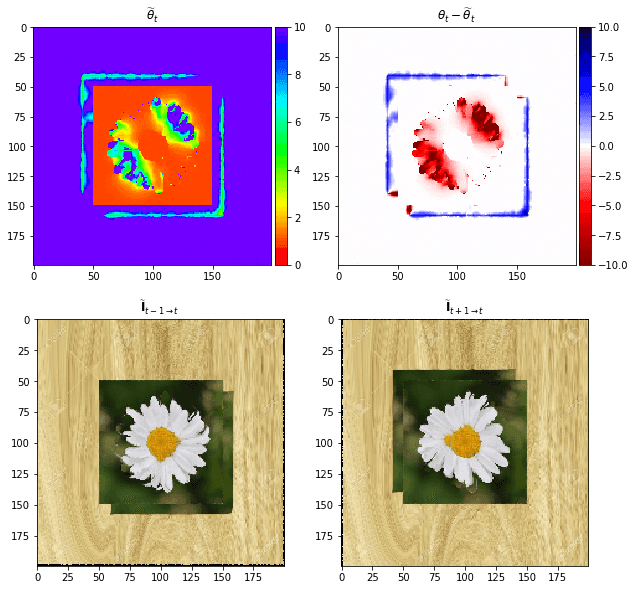

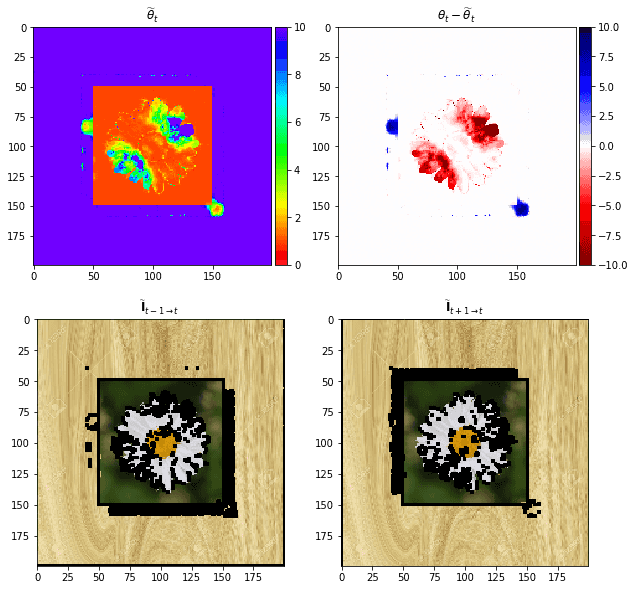

Occlusion detection

I introduced a new module for direct warping. This module is not designed for reprojection-based optimization, but to filter out occlusions.

See the dedicated GitHub repository for more details.

This occlusion filtering is particularly interesting for datasets with many occlusions (which is not the case for KITTI).
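As a rough illustration of how direct (forward) warping can expose occlusions, here is a 1D sketch. It is not the module from the thesis: the z-buffer strategy, the nearest-cell splatting, and every name below (`occlusion_mask_1d`, `masked_photometric_loss`, `tol`) are assumptions made for the example.

```python
def occlusion_mask_1d(depth, flow, tol=1e-6):
    """Return True for pixels that remain visible after forward warping.

    Each pixel i is splatted to the nearest cell of i + flow[i]. When several
    pixels land on the same cell, only the closest one (smallest depth) is
    kept as visible. Pixels leaving the frame are marked as not visible.
    """
    n = len(depth)
    zbuffer = [float("inf")] * n
    targets = []
    for i in range(n):
        j = int(round(i + flow[i]))
        targets.append(j)
        if 0 <= j < n:
            zbuffer[j] = min(zbuffer[j], depth[i])
    return [
        0 <= j < n and depth[i] <= zbuffer[j] + tol
        for i, j in enumerate(targets)
    ]


def masked_photometric_loss(target, warped, mask):
    """Mean absolute error computed over visible pixels only."""
    errors = [abs(t - w) for t, w, m in zip(target, warped, mask) if m]
    return sum(errors) / len(errors) if errors else 0.0
```

For instance, with `depth = [5, 5, 1, 5]` and `flow = [0, 0, -1, 0]`, the foreground pixel at index 2 moves onto cell 1 and hides the background pixel that was there, so the background pixel at index 1 is discarded from the loss.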


Result of reprojection-based optimization for the flow example without (left) and with (right) occlusion filtering. Black areas in the reconstructed frames are the discarded pixels. Without occlusion filtering, a ghost area is visible around the foreground, whereas the result with filtering is much sharper. See this GitHub repo to reproduce these figures.

Application on Unsupervised DepthNet

See dedicated page: Learning structure-from-motion from motion

Using DepthNet for obstacle avoidance

From DepthNet to real depth

As with every depth-from-motion algorithm, the scale factor remains to be solved. For a UAV with flight sensors, this is easy thanks to the measured speed. I introduced a way of using DepthNet to get a potentially infinite depth range, by carefully selecting frames that are spatially close or far apart. For more information, see the dedicated page, Introducing DepthNet, and especially the second paper: Multi range Real-time depth inference from a monocular stabilized footage using a Fully Convolutional Neural Network
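The scale recovery and frame selection described here can be sketched as follows. This is a simplified illustration, not the procedure from the paper: it assumes pure translation at a known speed, a network whose output is valid for a fixed training displacement (`nominal_displacement`, a hypothetical parameter), and a buffer of at most `max_shift` past frames.

```python
def displacement(speed, frame_interval, frame_shift):
    """Distance travelled (m) between two frames that are `frame_shift` frames apart."""
    return speed * frame_interval * frame_shift


def metric_depth(network_depth, actual_displacement, nominal_displacement):
    """Rescale the network output to metric depth.

    For a given apparent motion, depth is proportional to the camera
    displacement, so the output is scaled by the displacement ratio.
    """
    return network_depth * actual_displacement / nominal_displacement


def choose_frame_shift(speed, frame_interval, desired_displacement, max_shift):
    """Pick the frame shift whose displacement is closest to the desired one.

    A larger desired displacement favors far obstacles, a smaller one
    favors near obstacles. The result is clamped to [1, max_shift].
    """
    per_frame = speed * frame_interval
    if per_frame <= 0:
        return max_shift  # no measurable motion: use the widest baseline available
    shift = round(desired_displacement / per_frame)
    return max(1, min(max_shift, shift))
```

As a usage example, a drone flying at 2 m/s with frames every 0.25 s moves 0.5 m per frame; asking for a 1.5 m displacement then selects a shift of 3 frames, and a network output of 10 obtained with a nominal displacement of 0.25 m and an actual displacement of 0.5 m is rescaled to 20 m.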

Proof of concept on a Parrot Bebop Drone

With the help of Parrot, I designed a proof of concept of an obstacle avoidance system using DepthNet. The network computes depth from a stabilized video, and the result is streamed back to the obstacle avoidance algorithm, which uses Model Predictive Control.

Defense

The defense took place on June 26, 2019 at ENSTA. The jury was composed of Yann Gousseau (Jury President), Patrick Pérez (Reviewer), Nicolas Thome (Reviewer), Pascal Monasse (Examiner), Laure Chevalley (PhD co-advisor from Parrot), David Filliat (PhD co-advisor) and Antoine Manzanera (PhD director).

The whole defense has been recorded in this video (in French). The slides can be downloaded here (see above).

Citation

If you use results from this thesis in your work, please cite the following reference.

@phdthesis{pinard:tel-02285215,
  TITLE = {{Robust Learning of a depth map for obstacle avoidance with a monocular stabilized flying camera}},
  AUTHOR = {Pinard, Cl{\'e}ment},
  URL = {https://pastel.archives-ouvertes.fr/tel-02285215},
  NUMBER = {2019SACLY003},
  SCHOOL = {{Universit{\'e} Paris Saclay (COmUE)}},
  YEAR = {2019},
  MONTH = Jun,
  TYPE = {Theses},
  PDF = {https://pastel.archives-ouvertes.fr/tel-02285215/file/77151_PINARD_2019_archivage.pdf},
  HAL_ID = {tel-02285215},
  HAL_VERSION = {v1},
}